Over the past few years, artificial intelligence has made inroads into almost every aspect of our lives. One area where its impact is especially visible is emotion detection and its integration into human-computer interaction (HCI), the field concerned with how people interact with computers and computing systems. As technology reaches ever deeper into daily life, the ability to understand human emotion and respond appropriately has become a key step toward more intuitive, natural, and empathetic systems. This paper reviews developments that are improving AI-based emotion detection and considers how they are likely to shape the future of HCI.

The Evolution of Emotion Detection

Emotion detection, a core part of the broader field of affective computing, refers to the ability to infer human emotions from facial expressions, tone of voice, gestures, and even physiological signals. The field has advanced considerably alongside AI, particularly machine learning and deep learning, which allow computers to recognize the patterns and behaviors associated with different emotional states and so help bridge the gap between human and machine communication.

In the past, emotion detection relied on fairly simple rule-based systems. Because they depended on predetermined rules, these systems often failed to capture the complexity of human feelings. Today, AI has made emotion detection data-driven: machines learn from large sets of examples and can interpret human feelings with considerably more nuance.

How AI Detects Emotions

AI can detect emotions through several techniques, some of them quite sophisticated, including:

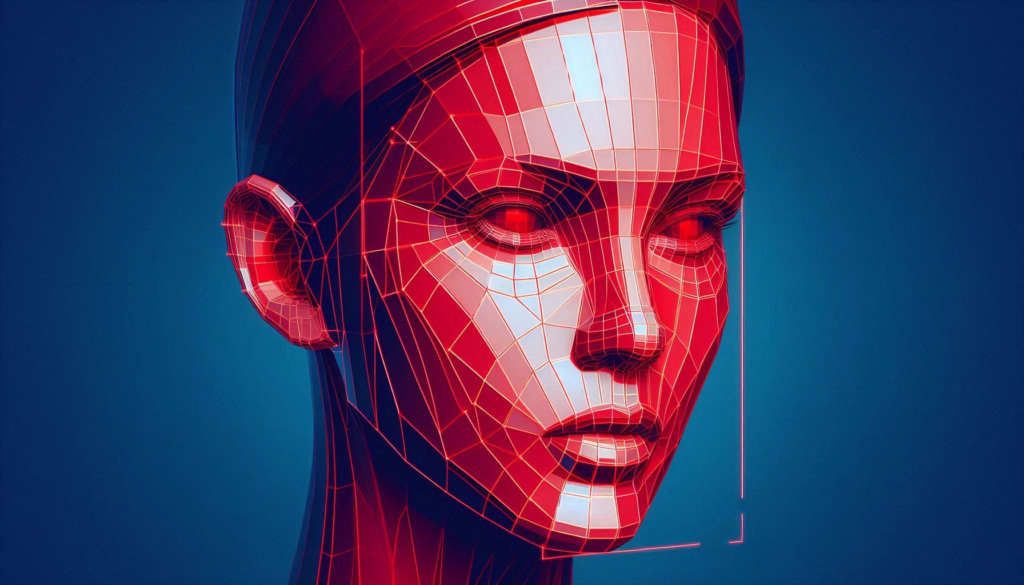

- Facial Expression Recognition: AI can analyze facial features in real time to identify emotions such as happiness, anger, sadness, or surprise. Deep learning models such as convolutional neural networks (CNNs) are widely used for facial landmark detection and micro-expression analysis.

- Voice Analysis: Emotions are also conveyed through tone, pitch, and tempo, so audio signals can carry cues of stress, excitement, frustration, and more. Extracting emotional content from spoken words often involves a hybrid approach that combines speech recognition with natural language processing (NLP) models.

- Text Analysis: Through sentiment analysis, AI can infer the emotions behind a piece of text. By analyzing a customer’s social media posts, e-mails, or reviews, machine learning models can determine whether the writing expresses joy, anger, or even fear.

- Physiological Monitoring: Wearable devices and sensors can track physiological indicators of emotional arousal, such as heart rate and skin conductance (changes in the skin’s moisture caused by sweating). AI processes these signals to estimate a person’s emotional state in real time.
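
In practice, these modalities are often combined. The sketch below shows one simple way to do that, late fusion, in which each modality-specific model outputs its own probabilities over a shared set of emotion labels and the system takes a weighted average. It is a minimal illustration under stated assumptions, not how any particular product works: the label set, the modality names (`face`, `voice`, `text`), the weights, and the `late_fusion` function are all hypothetical, and the per-modality probabilities are made-up inputs rather than the output of real models.

```python
from typing import Dict, Tuple

# Hypothetical label set shared by every modality-specific model.
EMOTIONS = ("happiness", "anger", "sadness", "surprise")


def late_fusion(
    modality_scores: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> Tuple[str, Dict[str, float]]:
    """Fuse per-modality emotion probabilities by weighted averaging.

    modality_scores maps a modality name (e.g. "face", "voice") to that
    model's probability for each label in EMOTIONS. weights maps modality
    names to non-negative trust weights; modalities without a positive
    weight are ignored.
    """
    used = [m for m in modality_scores if weights.get(m, 0.0) > 0.0]
    if not used:
        raise ValueError("at least one modality needs a positive weight")
    total = sum(weights[m] for m in used)
    fused = {
        emotion: sum(weights[m] * modality_scores[m].get(emotion, 0.0) for m in used) / total
        for emotion in EMOTIONS
    }
    # The predicted emotion is the label with the highest fused probability.
    best = max(fused, key=fused.get)
    return best, fused


# Illustrative inputs only; real values would come from trained models.
scores = {
    "face": {"happiness": 0.1, "anger": 0.6, "sadness": 0.2, "surprise": 0.1},
    "voice": {"happiness": 0.2, "anger": 0.5, "sadness": 0.2, "surprise": 0.1},
    "text": {"happiness": 0.7, "anger": 0.1, "sadness": 0.1, "surprise": 0.1},
}
weights = {"face": 0.4, "voice": 0.4, "text": 0.2}

label, fused = late_fusion(scores, weights)
print(label, {k: round(v, 2) for k, v in fused.items()})
```

Here the face and voice models outweigh the more cheerful text reading, so the fused result favors anger (about 0.46). Weighted averaging is the simplest fusion rule; the weights let a system trust, for instance, facial cues more than text when the camera view is clear. Early (feature-level) fusion, where features from all modalities feed a single model, is a common alternative.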

Emotion Detection in Human-Computer Interaction

Emotion detection is bringing about a number of changes in human-computer interaction. Some important areas where AI-driven emotion detection enhances HCI are listed below:

- Emotionally Intelligent Systems: These systems can sense the user’s mood and respond accordingly. A voice assistant such as Siri or Alexa, for example, could adjust its tone or suggest calming content when a user seems stressed or sad. Such personalization can make the user experience more engaging and satisfying.

- Healthcare Applications: AI is proving valuable in healthcare, especially in the diagnosis and treatment of mental health conditions. AI chatbots and virtual therapists can monitor patients’ emotional responses during sessions, helping to track a patient’s progress or flag potential signs of depression or anxiety. Remote monitoring systems that track emotional patterns through wearable sensors also make early intervention possible for at-risk individuals.

- Customer Service and Support: Emotion detection could transform customer service. By analyzing the emotional tone of a customer’s inquiry, whether spoken or written, AI systems can route the interaction to a human agent when needed or tailor an empathetic response to the customer’s mood. The result can be greater customer satisfaction and stronger customer relationships.

- Entertainment and Gaming: Emotionally responsive games can adjust difficulty, narrative, or in-game events according to the player’s emotional state, making the experience more immersive and engaging. Streaming services could likewise suggest content based on the viewer’s mood, creating a more interactive and personalized entertainment experience.

- Education and Training: AI emotion detection can strengthen online learning platforms by identifying when a learner is confused, frustrated, or disengaged. The system can then offer additional support, adapt the learning material, or alert instructors so they can step in at the right moment, improving learning outcomes.
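
The customer-service scenario, routing a conversation to a human agent when needed, can be sketched as a simple escalation rule. This is a hypothetical illustration: it assumes an upstream emotion model already assigns each customer message a frustration score between 0 and 1, and the `EscalationMonitor` class, its smoothing factor `alpha`, and its `threshold` are invented for this example. An exponential moving average keeps a single noisy reading from triggering a hand-off on its own, while sustained frustration does.

```python
from dataclasses import dataclass


@dataclass
class EscalationMonitor:
    """Hypothetical rule for handing a support conversation to a human agent.

    Each customer message is assumed to arrive with a frustration score in
    [0, 1] from an upstream emotion model. The monitor smooths those scores
    with an exponential moving average and escalates once the average
    reaches the threshold.
    """

    alpha: float = 0.5      # weight given to the newest score (0 < alpha <= 1)
    threshold: float = 0.6  # smoothed frustration level that triggers escalation
    average: float = 0.0    # running average, starting from a calm baseline

    def update(self, frustration: float) -> bool:
        """Record one message's frustration score; return True to escalate."""
        if not 0.0 <= frustration <= 1.0:
            raise ValueError("frustration score must be between 0 and 1")
        self.average = self.alpha * frustration + (1.0 - self.alpha) * self.average
        return self.average >= self.threshold


monitor = EscalationMonitor()
decisions = [monitor.update(score) for score in (0.2, 0.9, 0.5, 0.9)]
print(decisions)
```

With the defaults, a single score of 0.9 on a fresh conversation raises the average only to 0.45, below the 0.6 threshold, but two consecutive readings of 0.9 raise it to 0.675 and trigger escalation. A larger `alpha` reacts faster to the latest message; a smaller one favors stability.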

Future of AI Emotion Detection and Human-Computer Interaction

AI is likely to make significant strides in emotion detection and HCI in the coming years. More advanced algorithms, better data collection methods, and improved hardware should yield more accurate and deeper insights into how people feel.

Emotion-aware AI may soon be central to industries as varied as personalized marketing, education, and public safety. As the technology continues to improve, the opportunities for more intuitive, empathetic, and human-centered interactions with technology will keep multiplying.